Machine Learning & Simulation

Germany

Joined 27 Feb 2021

Explaining topics of 🤖 Machine Learning & 🌊 Simulation with intuition, visualization and code.

------

Hey,

welcome to my channel of explanatory videos for Machine Learning & Simulation. I cover topics from Probabilistic Machine Learning, High-Performance Computing, Continuum Mechanics, Numerical Analysis, Computational Fluid Dynamics, Automatic Differentiation and Adjoint Methods. Many videos include hands-on coding parts in Python, Julia, or C++. The videos also showcase the application of the topics in modern libraries like JAX, TensorFlow Probability, NumPy, SciPy, FEniCS, PETSc and many more.

All material is also available on the GitHub Repo of the channel: github.com/Ceyron/machine-learning-and-simulation

Enjoy :) And please leave feedback.

If you liked the videos, feel free to support the channel on Patreon: www.patreon.com/MLsim

If you want to make a one-time donation, you can do so via PayPal: paypal.me/FelixMKoehler

Unrolled vs. Implicit Autodiff

Unrolled Differentiation of an iterative algorithm can produce the "Curse of Unrolling" phenomenon on the Jacobian suboptimality. How much better is implicit automatic differentiation? Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/adjoints_sensitivities_automatic_differentiation/curse_of_unrolling_against_implicit_diff.ipynb
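
A minimal NumPy sketch of the comparison, not the notebook's code. It assumes a hypothetical scalar inner problem f(x, θ) = ½·θ·x² − x solved by gradient descent, whose minimizer x*(θ) = 1/θ has the known sensitivity dx*/dθ = −1/θ². The function name and default values are illustrative only.

```python
import numpy as np


def unrolled_vs_implicit(theta=2.0, lr=0.1, n_steps=50):
    """Jacobian errors of unrolled and implicit differentiation per iterate."""
    true_jac = -1.0 / theta**2  # analytical dx*/dtheta
    x, jac_unrolled = 0.0, 0.0  # initial guess and its sensitivity
    err_unrolled, err_implicit = [], []
    for _ in range(n_steps):
        # Unrolled: differentiate the update rule itself w.r.t. theta
        # (this uses the iterate from before the update).
        jac_unrolled = jac_unrolled - lr * (x + theta * jac_unrolled)
        # Gradient-descent step on f(x, theta) = 0.5 * theta * x**2 - x
        x = x - lr * (theta * x - 1.0)
        # Implicit: apply the implicit function theorem to the optimality
        # condition g(x, theta) = theta * x - 1 = 0 at the current iterate,
        # i.e. dx/dtheta = -(dg/dx)^-1 * dg/dtheta = -x / theta.
        jac_implicit = -x / theta
        err_unrolled.append(abs(jac_unrolled - true_jac))
        err_implicit.append(abs(jac_implicit - true_jac))
    return np.array(err_unrolled), np.array(err_implicit)


err_unrolled, err_implicit = unrolled_vs_implicit()
```

In this toy setting both errors decay to zero, but the unrolled Jacobian lags behind the implicit one at every iterate.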

-----

👉 This educational series is supported by the world-leaders in integrating machine learning and artificial intelligence with simulation and scientific computing, Pasteur Labs and Institute for Simulation Intelligence. Check out simulation.science/ for more on their pursuit of 'Nobel-Turing' technologies (arxiv.org/abs/2112.03235), and for partnership or career opportunities.

-------

📝 : Check out the GitHub Repository of the channel, where I upload all the handwritten notes and source-code files (contributions are very welcome): github.com/Ceyron/machine-learning-and-simulation

📢 : Follow me on LinkedIn or Twitter for updates on the channel and other cool Machine Learning & Simulation stuff: www.linkedin.com/in/felix-koehler and felix_m_koehler

💸 : If you want to support my work on the channel, you can become a Patreon here: www.patreon.com/MLsim

🪙 : Or you can make a one-time donation via PayPal: www.paypal.com/paypalme/FelixMKoehler

-------

⚙️ My Gear:

(Below are affiliate links to Amazon. If you decide to purchase the product or something else on Amazon through this link, I earn a small commission.)

- 🎙️ Microphone: Blue Yeti: amzn.to/3NU7OAs

- ⌨️ Logitech TKL Mechanical Keyboard: amzn.to/3JhEtwp

- 🎨 Gaomon Drawing Tablet (similar to a WACOM Tablet, but cheaper, works flawlessly under Linux): amzn.to/37katmf

- 🔌 Laptop Charger: amzn.to/3ja0imP

- 💻 My Laptop (generally I like the Dell XPS series): amzn.to/38xrABL

- 📱 My Phone: Fairphone 4 (I love the sustainability and repairability aspect of it): amzn.to/3Jr4ZmV

If I had to purchase these items again, I would probably change the following:

- 🎙️ Rode NT: amzn.to/3NUIGtw

- 💻 Framework Laptop (I do not get a commission here, but I love the vision of Framework. It will definitely be my next Ultrabook): frame.work

As an Amazon Associate I earn from qualifying purchases.

-------

Timestamps:

00:00 Intro

00:00 Recap

01:55 Theory of Implicit Diff

03:32 Compute implicit Jacobian

04:00 Plotting and discussion

06:23 Outro

Views: 671

Videos

Unrolled Autodiff of iterative Algorithms

1.3K views, 28 days ago

When you have iterative parts in a computational graph (like optimization problems, linear solves, root-finding etc.) you can differentiate them either by unrolling or implicitly. The former shows a counter-intuitive Jacobian convergence (the "Curse of Unrolling"). Code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/adjoints_sensitivities_automatic_differentiation/curse_of_u...

UNet Tutorial in JAX

1.2K views, 1 month ago

UNets are a famous architecture for image segmentation. With their hierarchical structure they have a wide receptive field. Similar to multigrid methods, we will use them in this video to solve the Poisson equation in the Equinox deep learning framework. Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/neural_operators/simple_unet_poisson_solver_in_jax.ipynb...

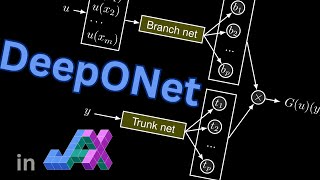

DeepONet Tutorial in JAX

2K views, 2 months ago

Neural operators are deep learning architectures that approximate nonlinear operators, for instance, to learn the solution to a parametric PDE. The DeepONet is one type in which we can query the output at arbitrary points. Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/neural_operators/simple_deepOnet_in_JAX.ipynb 👉 This educational series is supported by ...

Spectral Derivative in 3d using NumPy and the RFFT

987 views, 2 months ago

The Fast Fourier Transform works in arbitrary dimensions. Hence, we can also use it to differentiate n-dimensional fields spectrally. In this video, we clarify the details of this procedure, including how to adapt the np.meshgrid indexing style. Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/fft_and_spectral_methods/spectral_derivative_3d_in_numpy_with_rfft.ipynb...
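
A minimal NumPy sketch of the idea, assuming a cubic periodic domain; it is not taken from the linked notebook. The function name `spectral_derivative_3d` and the test field are hypothetical.

```python
import numpy as np


def spectral_derivative_3d(field, domain_size, axis):
    """Derivative of a periodic 3D field along `axis` via the real-valued FFT."""
    n_x, n_y, n_z = field.shape
    # Full wavenumber range on the first two axes, halved range on the last
    # axis because rfftn only stores the non-negative frequencies there.
    k_x = 2 * np.pi * np.fft.fftfreq(n_x, d=domain_size / n_x)
    k_y = 2 * np.pi * np.fft.fftfreq(n_y, d=domain_size / n_y)
    k_z = 2 * np.pi * np.fft.rfftfreq(n_z, d=domain_size / n_z)
    # indexing="ij" keeps the array axes in (x, y, z) order
    wavenumbers = np.meshgrid(k_x, k_y, k_z, indexing="ij")
    field_hat = np.fft.rfftn(field)
    derivative_hat = 1j * wavenumbers[axis] * field_hat
    return np.fft.irfftn(derivative_hat, s=field.shape, axes=(0, 1, 2))


n_points, domain_size = 16, 2 * np.pi
grid = np.linspace(0.0, domain_size, n_points, endpoint=False)
x, y, z = np.meshgrid(grid, grid, grid, indexing="ij")
u = np.sin(x) * np.cos(2 * y) * np.sin(3 * z)
du_dy = spectral_derivative_3d(u, domain_size, axis=1)
```

With the default `indexing="xy"` of `np.meshgrid` the first two axes would be swapped, which is why the sketch passes `indexing="ij"` for both the spatial grid and the wavenumber grid.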

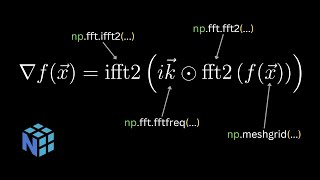

NumPy.fft.rfft2 - real-valued spectral derivatives in 2D

510 views, 3 months ago

How does the real-valued fast Fourier transformation work in two dimensions? The Fourier shape becomes a bit tricky when only one axis is halved, requiring special care when setting up the wavenumber array. Here is the notebook: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/fft_and_spectral_methods/spectral_derivative_2d_in_numpy_with_rfft.ipynb 👉 This educational series i...
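
To illustrate the shape issue, here is a small NumPy sketch (an assumption-laden example, not the notebook's code): only the last axis is halved by `np.fft.rfft2`, so the wavenumbers come from `fftfreq` on the first axis and from `rfftfreq` on the last. The grid size and the test function are made up for the illustration.

```python
import numpy as np

n_points, domain_size = 32, 2 * np.pi
grid = np.linspace(0.0, domain_size, n_points, endpoint=False)
x, y = np.meshgrid(grid, grid, indexing="ij")
u = np.sin(2 * x) * np.cos(3 * y)

# Only the last axis is halved: (32, 32) in real space -> (32, 17) in Fourier space
u_hat = np.fft.rfft2(u)

# Wavenumbers must match that shape: full range on axis 0, halved on axis 1
k_x = 2 * np.pi * np.fft.fftfreq(n_points, d=domain_size / n_points)
k_y = 2 * np.pi * np.fft.rfftfreq(n_points, d=domain_size / n_points)
k_x_grid, k_y_grid = np.meshgrid(k_x, k_y, indexing="ij")

# Partial derivatives: multiply by i * k along the respective axis
du_dx = np.fft.irfft2(1j * k_x_grid * u_hat, s=u.shape)
du_dy = np.fft.irfft2(1j * k_y_grid * u_hat, s=u.shape)
```

Passing `s=u.shape` to `irfft2` makes the original size of the halved axis explicit, which matters when the number of points is odd.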

2D Spectral Derivatives with NumPy.FFT

1.1K views, 3 months ago

The Fast Fourier Transform makes it easy to take derivatives of periodic functions. In this video, we look at how this concept extends to two dimensions, such as how to create the wavenumber grid and how to deal with partial derivatives. Here is the notebook: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/fft_and_spectral_methods/spectral_derivative_2d_in_numpy.ipynb 👉 This...

Softmax - Pullback/vJp rule

406 views, 4 months ago

The softmax is the last layer in deep networks used for classification, but how do you backpropagate over it? What primitive rule must the automatic differentiation framework understand? Here are the notes: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/adjoints_sensitivities_automatic_differentiation/rules/softmax_pullback.pdf 👉 This educational series is supported by the w...
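
As a hedged sketch of such a primitive rule (written from the standard softmax Jacobian diag(y) − y yᵀ, not copied from the linked notes): for y = softmax(x) and an output cotangent ȳ, the pullback is x̄ = y ⊙ (ȳ − ⟨ȳ, y⟩). The function names below are hypothetical.

```python
import numpy as np


def softmax(logits):
    # Subtract the maximum for numerical stability; the result is unchanged
    exps = np.exp(logits - np.max(logits))
    return exps / np.sum(exps)


def softmax_pullback(logits, output_cotangent):
    """vJp of the softmax: applies the transposed Jacobian without building it."""
    probs = softmax(logits)
    return probs * (output_cotangent - np.dot(output_cotangent, probs))


logits = np.array([1.0, -0.5, 2.0])
output_cotangent = np.array([0.3, -1.2, 0.7])
logits_cotangent = softmax_pullback(logits, output_cotangent)
```

The rule costs only O(n) operations, whereas forming the full n × n Jacobian first would cost O(n²).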

Softmax - Pushforward/Jvp rule

394 views, 4 months ago

The softmax is a common function in machine learning to map logit values to discrete probabilities. It is often used as the final layer in a neural network applied to multinomial regression problems. Here, we derive its rule for forward-mode AD. Here are the notes: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/adjoints_sensitivities_automatic_differentiation/rules/softmax_...
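
A minimal sketch of the forward-mode counterpart, again derived from the standard softmax Jacobian rather than taken from the linked notes: for y = softmax(x) and an input tangent ẋ, the pushforward is ẏ = y ⊙ (ẋ − ⟨y, ẋ⟩). Function names and sample values are illustrative assumptions.

```python
import numpy as np


def softmax(logits):
    # Subtract the maximum for numerical stability; the result is unchanged
    exps = np.exp(logits - np.max(logits))
    return exps / np.sum(exps)


def softmax_pushforward(logits, input_tangent):
    """Jvp of the softmax: directional derivative along `input_tangent`."""
    probs = softmax(logits)
    return probs * (input_tangent - np.dot(probs, input_tangent))


logits = np.array([0.2, 1.5, -1.0, 0.7])
input_tangent = np.array([1.0, 0.0, -0.5, 2.0])
output_tangent = softmax_pushforward(logits, input_tangent)
```

Because the softmax outputs always sum to one, the output tangent sums to zero, which is a quick sanity check for an implementation.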

Fourier Neural Operators (FNO) in JAX

5K views, 5 months ago

Neural Operators are mappings between (discretized) function spaces, for example from the initial condition (IC) of a PDE to its solution at a later point in time. FNOs do so by employing a spectral convolution that allows for multiscale properties. Let's code a simple example in JAX: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/neural_operators/simple_FNO_in_JAX.ipynb 👉 This educational series is ...

Custom Rollout transformation in JAX (using scan)

516 views, 5 months ago

Calling a timestepper repeatedly on its own output produces a temporal trajectory. In this video, we build syntactic sugar around jax.lax.scan to get a function transformation doing exactly this very efficiently. Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/jax_tutorials/rollout_transformation.ipynb 👉 This educational series is supported by the world-lea...
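
The shape of such a function transformation can be sketched in plain NumPy. This is only an illustration with a Python loop; the video builds it on jax.lax.scan instead, and the name `rollout` with its `include_init` flag is a hypothetical interface, not necessarily the one from the notebook.

```python
import numpy as np


def rollout(stepper, n_steps, include_init=False):
    """Turn a one-step function into a function that returns a trajectory."""

    def rollout_fn(u_0):
        trajectory = [u_0] if include_init else []
        u = u_0
        for _ in range(n_steps):
            u = stepper(u)  # feed the stepper its own output
            trajectory.append(u)
        return np.stack(trajectory)

    return rollout_fn


def double(u):
    # Toy timestepper used only for the demonstration
    return 2.0 * u


trajectory = rollout(double, n_steps=3)(np.array([1.0, 2.0]))
```

The transformation returns a new function, so it composes with other function transformations in the same way `jax.jit` or `jax.vmap` do in JAX.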

JAX.lax.scan tutorial (for autoregressive rollout)

1.5K views, 6 months ago

Do you still loop, append to a list, and stack as an array? That is exactly the use case for jax.lax.scan. With this command, producing trajectories becomes a blast. Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/jax_tutorials/jax_lax_scan_tutorial.ipynb 👉 This educational series is supported by the world-leaders in integrating machine learning and artificial in...
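
For intuition, the semantics of jax.lax.scan can be written as a pure-Python reference, similar in spirit to the one in the JAX documentation. The sketch below uses NumPy and is not JAX itself: the real primitive is compiled and works on pytrees, which this simplified version ignores. The decay stepper is a made-up example.

```python
import numpy as np


def scan(f, init, xs=None, length=None):
    """Pure-Python reference: thread a carry through f and stack the outputs."""
    if xs is None:
        xs = [None] * length
    carry = init
    ys = []
    for x in xs:
        carry, y = f(carry, x)
        ys.append(y)
    return carry, np.stack(ys)


def scan_step(u, _):
    # Autoregressive rollout: the new state is both the carry and the output
    u_next = 0.5 * u
    return u_next, u_next


u_0 = np.array([8.0, 4.0, 2.0])
u_final, trajectory = scan(scan_step, u_0, xs=None, length=4)
```

The step function returns a pair: the carry that is passed on to the next iteration and the per-step output that ends up in the stacked trajectory.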

Upgrade the KS solver in JAX to 2nd order

705 views, 6 months ago

The Exponential Time Differencing algorithm of order one applied to the Kuramoto-Sivashinsky equation quickly becomes unstable. Let's fix that by upgrading it to a Runge-Kutta-style second-order method. Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/fft_and_spectral_methods/ks_solver_etd_and_etdrk2_in_jax.ipynb 👉 This educational series is supported by the...

Simple KS solver in JAX

1.8K views, 6 months ago

The Kuramoto-Sivashinsky equation is a fourth-order partial differential equation that shows highly chaotic dynamics. It has become an exciting testbed for deep learning methods in physics. Here, we will code a simple Exponential Time Differencing (ETD) solver in JAX/Python. Code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/fft_and_spectral_methods/ks_solver_etd_in_jax.ipynb 👉 Th...
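
A rough NumPy sketch of a first-order ETD step, assuming the common form u_t + u u_x + u_xx + u_xxxx = 0 on a periodic domain. It is not the notebook's JAX code; the function name, grid size, domain size, and time step are illustrative assumptions, and no dealiasing is applied.

```python
import numpy as np


def make_ks_etd1_stepper(n_points=64, domain_size=32.0, dt=0.1):
    """Build a one-step function for the KS equation using first-order ETD."""
    wavenumbers = 2 * np.pi * np.fft.rfftfreq(n_points, d=domain_size / n_points)
    derivative_op = 1j * wavenumbers
    # Linear part in Fourier space: -u_xx - u_xxxx  ->  k^2 - k^4
    linear_op = wavenumbers**2 - wavenumbers**4
    exp_term = np.exp(dt * linear_op)
    # (exp(L dt) - 1) / L, replaced by its limit dt wherever L == 0
    safe_linear_op = np.where(linear_op == 0.0, 1.0, linear_op)
    coef = np.where(linear_op == 0.0, dt, np.expm1(dt * linear_op) / safe_linear_op)

    def step(u):
        u_hat = np.fft.rfft(u)
        # Nonlinear part -u u_x written in conservative form -0.5 * (u^2)_x
        nonlinear_hat = -0.5 * derivative_op * np.fft.rfft(u**2)
        u_next_hat = exp_term * u_hat + coef * nonlinear_hat
        return np.fft.irfft(u_next_hat, n=n_points)

    return step


step = make_ks_etd1_stepper()
x = np.linspace(0.0, 32.0, 64, endpoint=False)
u = np.cos(2 * np.pi * x / 32.0) + 0.5
for _ in range(10):
    u = step(u)
```

The linear part is integrated exactly through the exponential, so the stiff fourth-order term does not restrict the time step; only the nonlinear term is approximated to first order.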

np.fft.rfft for spectral derivatives in Python

925 views, 6 months ago

For real-valued inputs, the rfft saves about half of the computation over the classical fast Fourier transform. Let's use it to speed up the spectral derivative calculation. Here is the code: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/fft_and_spectral_methods/spectral_derivative_numpy_with_rfft.ipynb 👉 This educational series is supported by the world-leaders in integra...

Spectral Derivative with FFT in NumPy

2.1K views, 7 months ago

Physics-Informed Neural Networks in Julia

3K views, 8 months ago

Physics-Informed Neural Networks in JAX (with Equinox & Optax)

5K views, 9 months ago

Neural Networks using Lux.jl and Zygote.jl Autodiff in Julia

1.9K views, 10 months ago

Neural Networks in Equinox (JAX DL framework) with Optax

2.2K views, 10 months ago

Neural Networks in pure JAX (with automatic differentiation)

2K views, 11 months ago

Neural Network learns sine function in NumPy/Python with backprop from scratch

1.6K views, 11 months ago

Simple reverse-mode Autodiff in Python

2K views, 1 year ago

Animating the learning process of a Neural Network

2.1K views, 1 year ago

Neural Network learns Sine Function with custom backpropagation in Julia

1.2K views, 1 year ago

Simple reverse-mode Autodiff in Julia - Computational Chain

716 views, 1 year ago

Neural ODE - Pullback/vJp/adjoint rule

1.2K views, 1 year ago

Neural ODEs - Pushforward/Jvp rule

714 views, 1 year ago

L2 Loss (Least Squares) - Pullback/vJp rule

284 views, 1 year ago

L2 Loss (Least Squares) - Pushforward/Jvp rule

272 views, 1 year ago

Python tutorials: import tutorial tutorial.run()

Super cool as always. Some feedback to enhance clarity - when writing modules (SpectralConv1d, FNOBlock1d, FNO1d), overlaying the flowchart on the right hand side to show the block to which the code corresponds would be really helpful. I felt a bit lost in these parts.

Thank you so much for this series! It has helped me tremendously to understand how automatic differentiation works under the hood. I was wondering if you plan to continue the series, as there are still operations you haven't covered. In particular, I am interested in how the pullback rules can be derived for the "not so mathematical" operations such as permutations, padding, and shrinking of tensors.

I love how you just casually code this up from scratch and explain it so well along the way 😅

Just one observation: the functional unconstrained optimization solution gives you an unnormalized function, and you said it is necessary to normalize it afterwards. So what guarantees that this density, once normalized, is the optimal solution? If you normalize it, it will no longer be a solution to the functional derivative equation. Also, the Euler-Lagrange equation gives you a critical point; how does one know whether it is a local minimum, a maximum, or a saddle point?

Excellent work! Thanks a lot for sharing.

Thank you. This is super helpful as an intro to fenics for fluid.

Thank you for the video. Very informative and helps a lot. I have a small question: Why is there no density in the equations? It appears in the NS equations. And one bigger question: Is it possible to include heat transfer in this code with the same scheme as the pressure update, or should it be done a different way?

What if the velocity is not constant and changes with the density (q)?

Exam in 20 minutes, thanks haha

Best of luck! 😉

For full accuracy, at 0:15, the distribution of X is actually the distribution of X given Z=z, right?

I love you

I'm flattered 😅 Glad the video was helpful

Thank you so much for all your great videos. What IDE do you use?

You're welcome 🤗 That's Visual Studio Code.

Hello, I'm an undergraduate student from South Korea. I really appreciate your videos. They help me a lot in understanding JAX and programming. Can I know if this 1D turbulence flow has a name?

You're very welcome 🤗 Thanks for the kind feedback. This dynamic is associated with the Kuramoto-Sivashinsky equation. I'm not too certain if we can classify it as turbulent (depends on the definition), it definitely is chaotic (in the sense of high sensitivity with respect to the initial condition)

Thank you for this wonderful video. Please can you also do another one for a vertical flow for two phase liquid and gas

You're very welcome 🤗 Thanks for the suggestion. It's more of a niche topic. For now, I want to keep the videos rather general to address a larger audience

This dude is always on point ... keep it coming!

Thanks ❤️ More good stuff to come.

how do we know the joint dist?

That refers to us having access to a routine that evaluates the DAG. Check out my follow-up video. This should answer your question: ua-cam.com/video/gV1NWMiiAEI/v-deo.html

Very nice video. Truly showing the potential of Julia for SciML! I'm curious, have you compared this Julia algorithm with JAX? It seems much faster than training in JAX. However, I'm also worried: what if I need to construct an MLP rather than a one-layer net, which is the most common situation in ML? How about high-dimensional data rather than 1D data? Does that also increase the complexity of using Julia?

when u say Z is exponential distribution and X is normal distribution, how do u know this? Is this an assumption?

Yes, that's all a modeling assumption. Here, they are chosen because they allow for a closed-form solution.

Thank you very much!

You're welcome! 🤗

Great stuff

Thanks 🙏

Hi this was the most epic explanation I've ever seen, thank you! My question is that at ~14:25, you swap the numerator and denominator in the first term -- why did you do this swap?

Very cool video! The walkthrough write-up of this alternate program of 1D FNO is super useful for newcomers like myself :)

Great to hear! 😊 Thanks for the kind feedback ❤️

Hi man, awesome videos! A problem, if I may: let's say I have a box or cylinder partly filled with fluid (1/2, 3/4). I need to simulate acceleration on it: input (accelerationXYZ, dt), return the inertia feedback forces on XYZ over time. A simple level of detail is enough, for 300-400 fps. Maybe bake the center-of-mass behaviour into ML or something. Any ideas? Thanks!

Not sure if I'm mistaken or not. In your explanation, the formula is wrong since it's actually supposed to use "ln" rather than "log". For others looking at the math part of the explanation: np.log is actually doing ln, i.e. log base e. That got me confused for a while.

Good catch. 👍 Indeed, it has to be "ln" for the correct Box-Muller transform. CS people tend to always just write log 😅

Thanks! This helped with my probabilistic ML assignment!

Great 👍 Hope everything went well.

Great video on explaining even the math concepts, but I was left with a doubt, perhaps a stupid one: In the beginning of the video you had the blue line p(Z|D) = probability of the latent variable Z knowing D data, so events Z and D are not independent, right? If I understood correctly, then, at 10:20, you say that we have the joint probability P(Z *intersect* D). I don't think I understood this: how do we know we have that intersect? Is it explained in any prior minute...? Thank you for your attention

Hi .. was looking for JAX tutorials .. found this video and your channel and hence must say THANKS for the nice explanations.

You're very welcome 🤗 Welcome to the JAX side!

Are you German? Sound very German! Great videos BTW!

Yes 😁 Thanks for the kind comment 😊

10:27 I don't think it is right. Summation is for the whole (q * p/q), and we cannot conveniently apply summation to just q alone.

Great video! I think at 12:10 it should be inner_objective(gd_iterates,THETA) instead of solution_suboptimality. In this case it is still working since the analytical solution is 0.

Thanks for the kind comment 😊. Good catch at 12:10. 👍 I updated the file on GitHub: github.com/Ceyron/machine-learning-and-simulation/blob/main/english/adjoints_sensitivities_automatic_differentiation/curse_of_unrolling.ipynb

please make a video series on Graph neural network

Hi, thanks for the suggestion. 👍 Unfortunately, unstructured data is not my field of expertise. I want to delve into it at some point, but for now I want to stick with structured data.

Awesome stuff. I am wondering if you can do a similar video for the new neural spectral methods paper?

Thanks 🤗 That's a cool paper. I just skimmed over it. It's probably a good idea to start covering more recent papers. I'll put it on my todo list, still have some other content in the loop I want to do first, but will come back to it later. Thanks for the suggestion 👍

It would be cool here to show analytically that the maximum likelihood estimated parameter for a Bernoulli is just the sample mean of the data :) I guess autodiff is just performing this procedure numerically?

Definitely, autodiff is a bit of an overkill here :D Was more of a showcase how to do this with TFP. In case you are interested in the MLE for Bernoulli: ua-cam.com/video/nTizrDsR1x8/v-deo.html

Really cool stuff, with a clear explanation in 1D, that seems rare. Great work, thanks!

Thanks 😊

Amazing expectation thank you so much!

You're welcome 🤗 Thanks for the kind words.

Super interesting observation and super clear explanation! Thanks for sharing it with us!

Thanks for the kind words 😊. Much appreciated ❤️

This was very useful!! Thank you so much!! Can you please make a video on how to solve helmholtz pde in BEMPP (boundary element method python package) for acoustic simulations?

Thanks for the kind comment ❤️ You're very welcome. I am familiar with neither the Helmholtz PDE nor the BEMPP package. As far as I can see, the Helmholtz PDE essentially is a Poisson problem. Maybe you find this video of mine helpful: ua-cam.com/video/O7f8B2gPzSc/v-deo.html

So clear!

Thanks for the comment 😊 I used auto translate (which didn't turn out too well). I hope you liked the video 👍

Glad I found your channel. Great explanation 👍

Welcome aboard! 😊 You're very welcome 🤗

writing so ugly...

Do you have the code for this saved? If so, could I have it for reference on my own fluid sim?

Nevermind I got it worked out

Great 👍 Feel free to share your code to help others 😊

Can we also use FEniCS to solve the nonlinear heat equation, i.e. the case where the diffusivity depends on temperature?

I'm pretty sure this is possible. If you check out the FEniCS documentation you will find a section on solving nonlinear PDEs with Newton's method.

Challenging! Can this video solve the problem of finding dA/dθ in adjoint optimization for min J(x(θ),θ); s.t. Ax=b; 😀

Definitely; If you implement the rule as part of your autodiff system you can use gradient-based optimizers to solve the optimization problem 😊

6:00 this next step doesn't look correct to me, e.g., if I choose a specific g(u,\theta) = \theta^u, I don't think I would get a sum with these two terms. Am I missing something? The right solution of the derivation of the integrand would have been just \theta^u. Your equation proposes something else.

Thanks so much for the video explanation! 😍 My understanding is that both Lagrangian and implicit derivative methods yield the same sensitivity analysis results, right? The Lagrangian approach is more convenient for large linear equation constrained implementations, as it requires only one forward and one backward propagation computation per iteration. In CFD, sensitivity analysis and adjoint optimization are typically implemented using adjoint equations, right?

You're very welcome 🤗 Yes, both are just two ways to derive the same result.

Do you use fenicsx?

I haven't used it (yet). It's probably to be preferred over the legacy FEniCS.

Hello, thank you for the tutorial. It is very helpful. Could you clear up a doubt? For the pressure boundary condition, could I use homogeneous Neumann everywhere and set pressure = 0 at one node (any node inside the domain)? Then there would not be infinitely many solutions, but a single solution that is zero at the specified node.

You're very welcome. 😊 Yes, that's one way to fix the additional degree of freedom when solving pressure Poisson problems.

Thank you very much for these videos; they are incredible and offer the best explanations of these topics that I've found online. I particularly appreciate the format of your introductions, where you succinctly review prior knowledge and clearly define the key question that the video will explore. My understanding of the Dirichlet distribution is that it serves as a prior for probabilities, with each individual probability constrained to the [0, 1] range. I was wondering if you're aware of any methods to impose specific bounds on individual probabilities within the context of the Dirichlet distribution. For example, suppose you had prior knowledge that the probability of it being sunny could never exceed 0.8; you might want to set its range between [0, 0.8]. Of course, the sum of probabilities would still need to total 1. Is there a known approach or modification to the Dirichlet distribution that accommodates this type of constraint? Many thanks for your help!

Excellent

Thank you! Cheers!